Project Overview

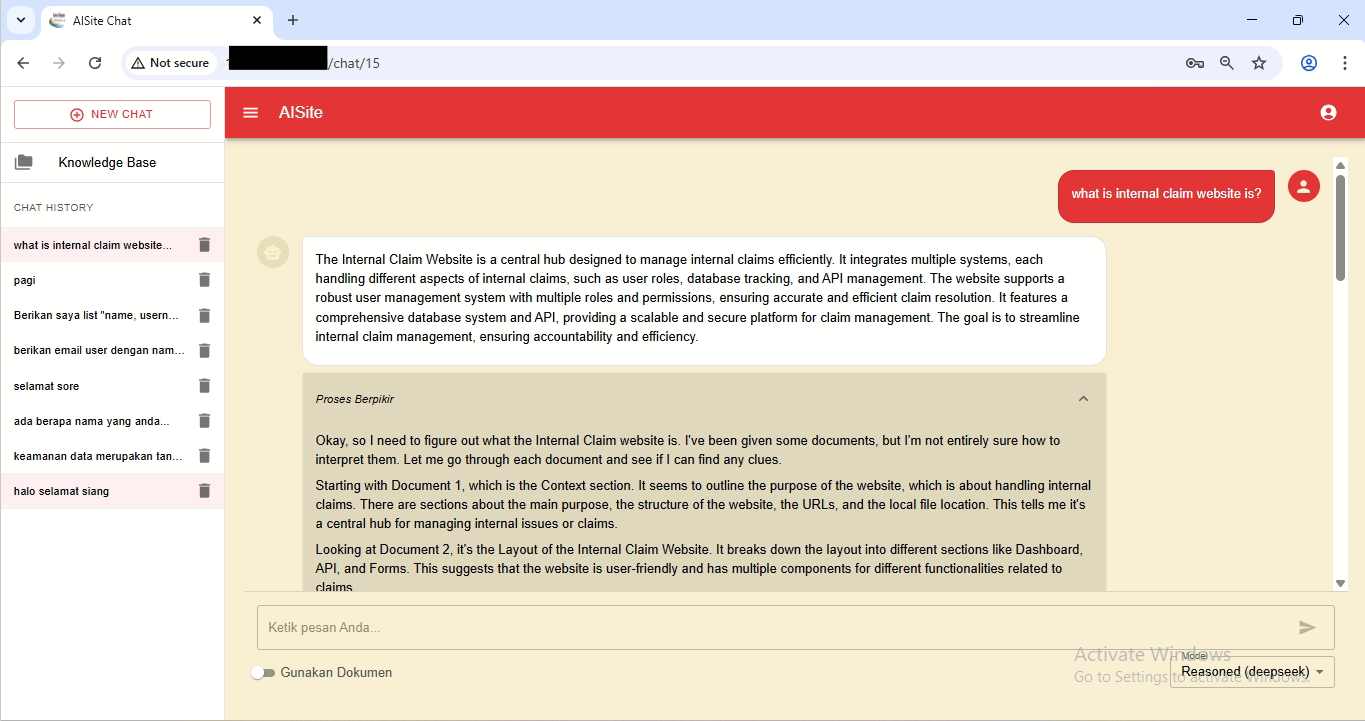

AISite is a full-stack conversational AI platform that serves as an intelligent interface to an internal knowledge base. Its core purpose is to let users get fast, accurate answers from a private collection of documents (PDF, DOCX, etc.) through a simple chat, while minimizing hallucination and keeping answers within the AI's designated knowledge scope.

Core AI Technology: Retrieval-Augmented Generation (RAG)

The chatbot is powered by a RAG pipeline designed to ground every answer in the provided documents. The process works as follows:

- Query Transformation: The user's raw question is first processed by a small, fast LLM (Llama 3.2 3B) to rephrase it into an optimized search query.

- Vector Retrieval: The optimized query is used to search a FAISS vector database, retrieving the 16 most semantically similar text chunks from the knowledge base.

- Relevance Gate: A hybrid filter then removes weakly related chunks to reduce noise. The top-scoring chunk is always kept, so the model always receives some context, while each remaining chunk is kept only if its similarity score meets a threshold.

- Prompt Engineering: The filtered chunks are compiled into a single context block, which is combined with the user's original question (not the rephrased search query) to form the final, context-rich prompt.

- Answer Generation: The final prompt is sent to the main LLM (Llama 3.2 3B or DeepSeek-R1-Distill-Qwen-1.5B), which is instructed to answer *only* from the provided context and to reply "I don't know" when the information is not present.

- Streaming Response: The answer is streamed back to the user token by token as it is generated, so the reply appears progressively instead of all at once.
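The pipeline steps above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the project's code: the model and index calls are passed in as functions (`rewrite`, `search`, and `generate` are hypothetical names), scores are assumed to be similarities where higher is better (as with inner product over normalized embeddings in a FAISS `IndexFlatIP`), and the 0.5 threshold and the prompt wording are placeholders because the real values are not given.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

# (chunk text, similarity score). Assumption: higher score = more similar,
# e.g. inner product over L2-normalized embeddings in a FAISS IndexFlatIP.
Hit = Tuple[str, float]

TOP_K = 16                  # chunks retrieved per query, as described above
SIMILARITY_THRESHOLD = 0.5  # hypothetical; the project's actual threshold is not stated


def relevance_gate(hits: List[Hit], threshold: float = SIMILARITY_THRESHOLD) -> List[str]:
    """Hybrid filter: always keep the best chunk, keep the rest only if they meet the threshold."""
    if not hits:
        return []
    ranked = sorted(hits, key=lambda hit: hit[1], reverse=True)
    kept = [ranked[0][0]]  # the top-scoring chunk passes unconditionally
    kept.extend(text for text, score in ranked[1:] if score >= threshold)
    return kept


def build_prompt(question: str, chunks: List[str]) -> str:
    """Combine the filtered chunks with the ORIGINAL question into one grounded prompt."""
    context = "\n\n---\n\n".join(chunks)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, reply \"I don't know\".\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer(
    question: str,
    rewrite: Callable[[str], str],             # hypothetical: small LLM that rephrases the question
    search: Callable[[str, int], List[Hit]],   # hypothetical: vector search returning scored chunks
    generate: Callable[[str], Iterable[str]],  # hypothetical: main LLM yielding tokens as produced
) -> Iterator[str]:
    search_query = rewrite(question)         # 1. query transformation
    hits = search(search_query, TOP_K)       # 2. vector retrieval (top 16)
    chunks = relevance_gate(hits)            # 3. relevance gate
    prompt = build_prompt(question, chunks)  # 4. prompt assembly with the original question
    yield from generate(prompt)              # 5-6. generation, streamed token by token
```

Passing the model and index calls in as functions keeps the orchestration testable without loading either LLM or the FAISS index. FAISS already returns results ordered by score, so the sort in `relevance_gate` is only a safeguard; if the index used L2 distance instead, lower scores would be better and the threshold comparison would have to be reversed.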

Full-Stack Architecture

The application uses a decoupled architecture with three main components: the frontend communicates with the backend through a REST API, and the backend reads from and writes to the database.

- Frontend: An interactive and responsive user interface built with Next.js, TypeScript, and Material-UI (MUI).

- Backend: A high-performance API server built with FastAPI (Python) that handles business logic and AI processing, using SQLAlchemy for database interactions.

- Database: Microsoft SQL Server is used for persistent data storage, including user information, chat history, and document metadata.

- Deployment: The entire stack runs on Windows Server. NSSM (the Non-Sucking Service Manager) runs the frontend and backend processes as continuous Windows services, and IIS acts as a reverse proxy that exposes both under a single entry point.
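The overview does not say how streamed tokens travel from the FastAPI backend to the Next.js frontend. One common option, assumed here, is Server-Sent Events (SSE) over an ordinary HTTP response. This sketch frames each token as an SSE event; the function name `sse_frames` and the `[DONE]` end marker are hypothetical, not taken from the project.

```python
import json
from typing import Iterable, Iterator


def sse_frames(tokens: Iterable[str]) -> Iterator[str]:
    """Wrap each generated token in a Server-Sent Events frame.

    An SSE frame is a "data: <payload>" line followed by a blank line.
    Tokens are JSON-encoded so that a newline inside a token cannot
    prematurely end the frame.
    """
    for token in tokens:
        yield f"data: {json.dumps({'token': token})}\n\n"
    # Hypothetical end-of-stream marker so the client knows the answer is complete.
    yield "data: [DONE]\n\n"
```

In FastAPI, such a generator could be returned as `StreamingResponse(sse_frames(token_iterator), media_type="text/event-stream")` (imported from `fastapi.responses`), and the Next.js client could read the response body incrementally. Because IIS sits in front as a reverse proxy, its response buffering may hold frames back, so it is worth confirming that the proxy forwards them without buffering.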